The Chain Rule: The Math Powering Neural Networks

Backpropagation enables neural network performance, and the chain rule is its core mechanism. This post explains its critical role in building effective models.

The Puzzle of Neural Networks

Neural networks predict trends, personalize recommendations, and detect fraud with high accuracy. However, the underlying math can challenge teams. For example, a data scientist spent weeks adjusting a recommendation model, only to see predictions drop by 10% due to misaligned gradients.

The issue was a weak understanding of backpropagation’s foundation: the chain rule.

This mathematical tool enables neural networks to learn, guiding teams to reliable results. Without it, models fail, projects delay, and stakeholders grow frustrated.

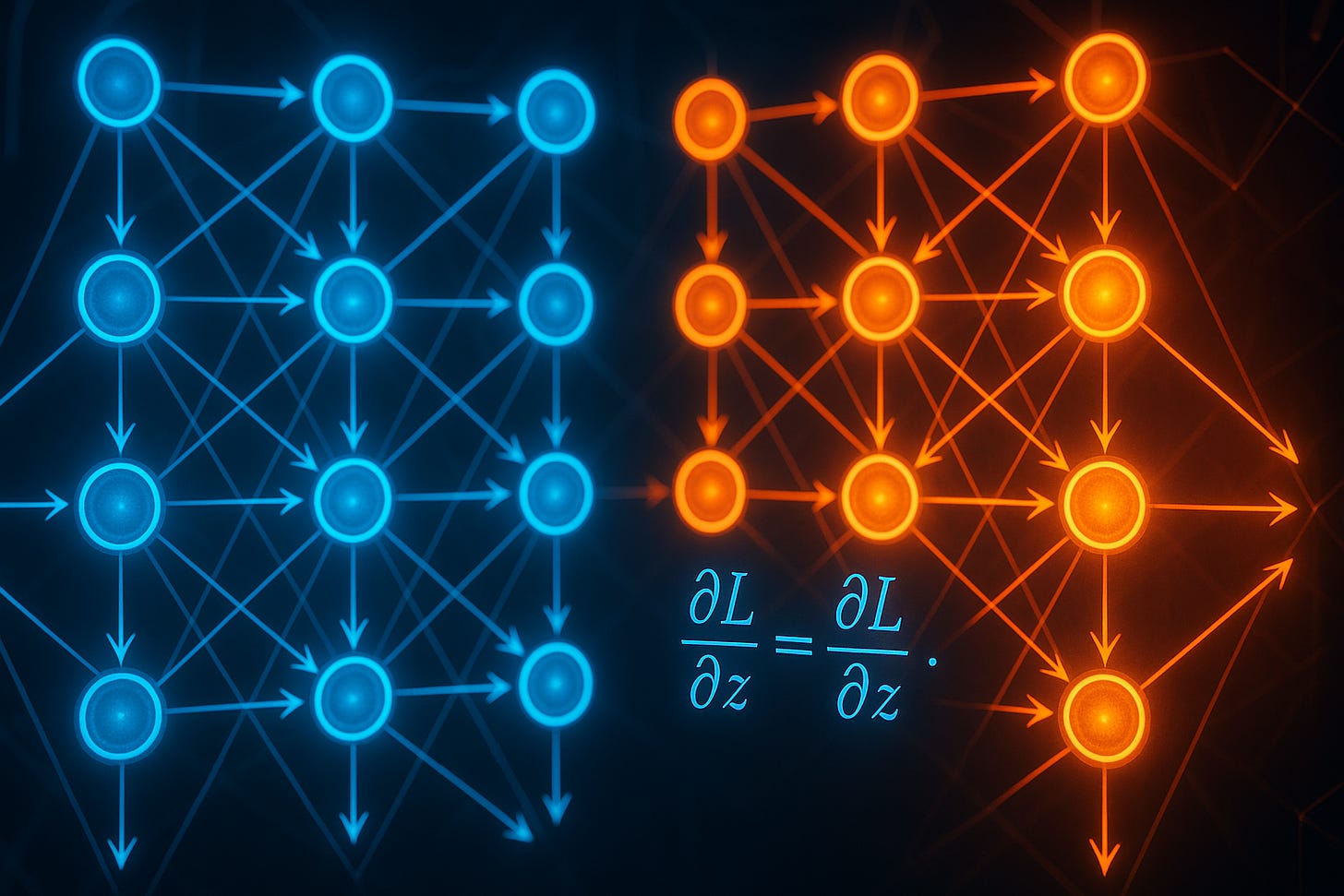

Understanding the Chain Rule in Backpropagation

Backpropagation adjusts weights to reduce errors, similar to tuning an instrument for accuracy. The chain rule connects each layer’s contribution to the final error. In a retail model predicting customer churn, data flo

ws through layers to produce an output.

If the prediction is inaccurate, backpropagation calculates the error and uses the chain rule to adjust weights backward, layer by layer.

Challenges in Applying the Chain Rule

The chain rule is straightforward in theory but complex in practice. Gradients can vanish (approach zero) or explode (grow excessively), disrupting learning. Vanishing gradients slow or stop weight updates, while exploding gradients cause unstable adjustments. Data noise can also skew gradients, leading to inaccurate predictions.

To address these issues:

Use ReLU or Leaky ReLU activations to maintain gradient flow.

Apply gradient clipping to limit extreme values.

Preprocess data to ensure clean gradients.

Use stable optimizers like Adam or RMSprop for balanced updates.

Balancing the chain rule’s precision with practical challenges, such as noisy data or complex architectures, requires careful tuning and validation.

Mastering the Chain Rule:

Effective use of the chain rule involves mapping the network’s data flow to understand how weights influence the error.

Visualizing gradients ensures each layer learns correctly. Tools like PyTorch or TensorFlow simplify gradient calculations, but understanding the chain rule’s mechanics is essential for informed adjustments.

Building Effective Models

The chain rule enables precise weight adjustments, leading to models that deliver accurate predictions. By aligning technical adjustments with desired outcomes, teams create impactful solutions.

The chain rule transforms complex optimization into manageable steps, driving reliable results.

The Future of Neural Networks

The chain rule in backpropagation powers effective neural networks. Mastering it ensures models optimize efficiently, turning data into actionable insights.

Experiment and Collaborate

Explore a neural network project and test how the chain rule refines weights to improve predictions. Adjust a parameter, such as the learning rate or activation function, and observe its effect. Share your findings or challenges in the comments to exchange ideas and learn together.

Share your results or challenges in the comments below—let’s learn from each others

Ready to dive deeper? Subscribe for more insights on mastering strategy and driving impact.

🧠Dive deeper with my latest posts on Medium and explore BrainScript, packed with Product, AI, and Frontend Development insights.

🔗 Connect with me on LinkedIn for exclusive takes and updates.