Unlocking Binary Decisions: The Hidden Power of Logistic Regression in Modern AI

Ever wondered why data scientists still reach for logistic regression—despite all the hype around neural networks? Discover the business-critical scenarios where this “old school” algorithm outperform

Why Logistic Regression Refuses to Die—A Deep Dive for Smart Decision-Makers

Ask any data scientist what powers fraud detection, customer churn prediction, or risk modeling in Fortune 500 firms, and you’ll hear about every tool from random forests to convolutional nets.

Yet, when reliability, speed, interpretability, and business alignment matter most, logistic regression keeps coming up. Why is this?

Too many blog posts rehash textbook math. Here are the high-value angles insiders focus on, and why they matter for you, whether you’re building, buying, or trusting a binary classifier.

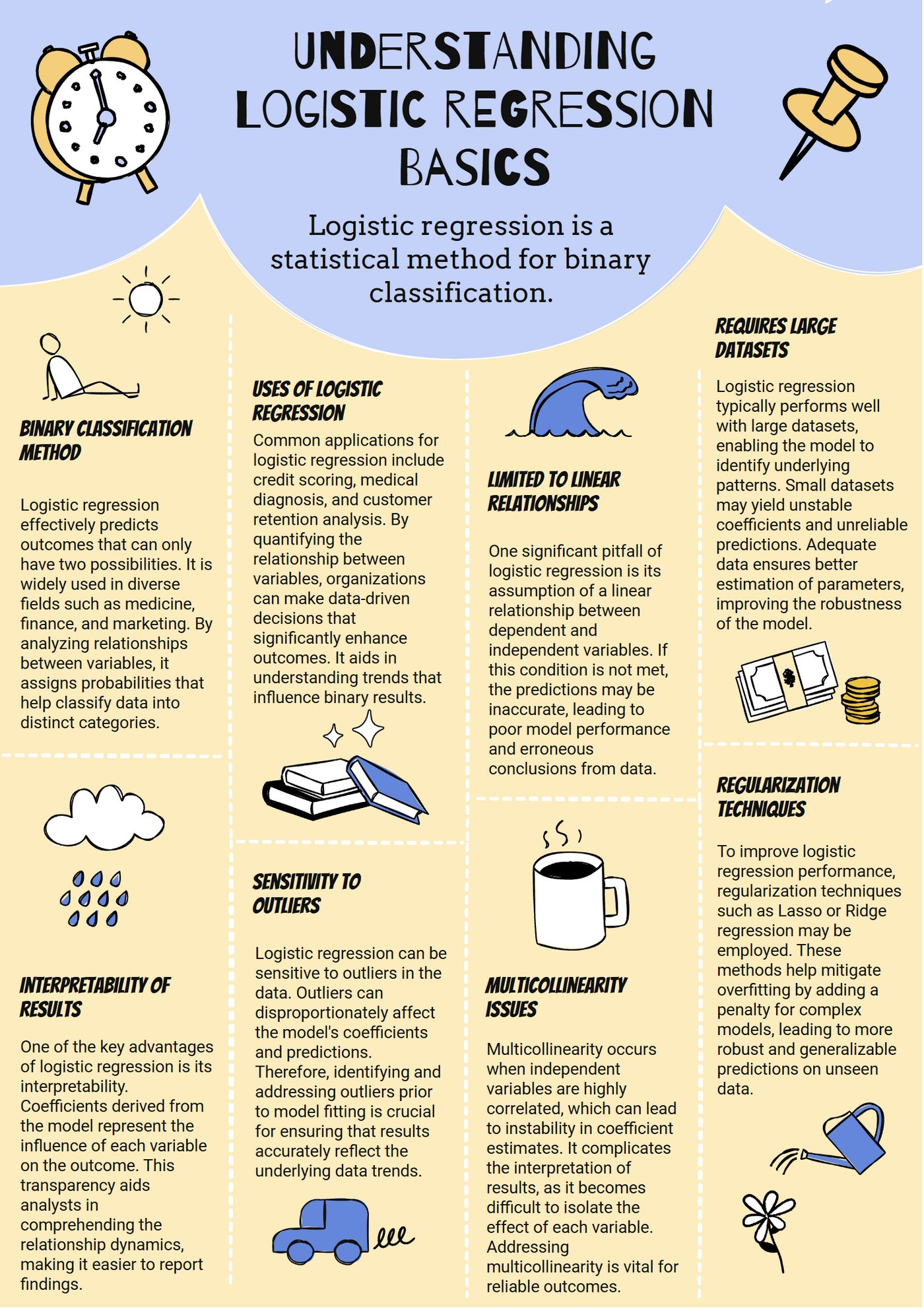

What Everyone Gets Wrong About Logistic Regression

It’s Not Just “Simple”: Most teams treat logistic regression as a throwaway baseline. The truth: with careful feature engineering and regularization it becomes a strong risk model in fintech, insurance, and health, where transparency often matters more than a marginal gain in raw accuracy.

You Can Match (and Sometimes Outperform) Deep Learning on Tabular Data: For highly structured, tabular data, logistic regression is remarkably competitive. Neural networks tend to pull ahead mainly with very large datasets or unstructured inputs such as images and text.

Mistuning the Cutoff Wrecks Business Value: Sticking with the default 0.5 threshold can be very expensive when your classes are imbalanced or when false positives and false negatives carry different real-world costs.

Practical Use Cases: Where Logistic Regression “Wins”

Regulatory Environments: Banks and hospitals trust logistic regression since every prediction—why a loan was declined, why a patient got flagged high risk—can be explained line-by-line. Auditors love it.

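Early-Stage Product Analytics: When data is scarce, logistic regression can surface actionable “first signals” without large datasets or heavy compute.

Feature Importance for Business Decisions: The sign and size of each coefficient show which features push the predicted odds up or down, which is easy to put in plain English for non-technical stakeholders. Compare magnitudes only when the features are on comparable scales.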
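As a rough illustration of how a single prediction can be broken down, here is a minimal sketch in plain Python. The feature names, coefficients, and intercept are invented for the example and do not come from any real model. The sketch shows two things: each feature's contribution to the log-odds for one applicant, and the odds ratio (the exponentiated coefficient) for each feature.

```python
import math

# Hypothetical fitted model for a loan-default classifier.
# Feature names, coefficients, and intercept are made up for illustration.
INTERCEPT = -2.0
COEFFICIENTS = {
    "late_payments_last_year": 0.8,
    "debt_to_income_ratio": 1.5,
    "years_as_customer": -0.3,
}


def sigmoid(z: float) -> float:
    """Map log-odds to a probability between 0 and 1."""
    return 1.0 / (1.0 + math.exp(-z))


def explain(applicant: dict) -> tuple:
    """Return the predicted probability and each feature's log-odds contribution."""
    contributions = {
        name: COEFFICIENTS[name] * applicant[name] for name in COEFFICIENTS
    }
    log_odds = INTERCEPT + sum(contributions.values())
    return sigmoid(log_odds), contributions


def odds_ratios() -> dict:
    """exp(coefficient): multiplicative change in odds per one-unit increase."""
    return {name: math.exp(coef) for name, coef in COEFFICIENTS.items()}


# Hypothetical applicant.
applicant = {
    "late_payments_last_year": 2.0,
    "debt_to_income_ratio": 0.4,
    "years_as_customer": 5.0,
}

probability, contributions = explain(applicant)
print(f"Predicted default probability: {probability:.3f}")

# Largest contributions (in absolute value) first.
for name, value in sorted(contributions.items(), key=lambda kv: -abs(kv[1])):
    print(f"  {name}: {value:+.2f} log-odds")

for name, ratio in odds_ratios().items():
    print(f"  {name}: odds ratio {ratio:.2f}")
```

An odds ratio above 1 means the feature raises the odds of the outcome, and below 1 means it lowers them. The per-feature breakdown is the kind of line-by-line account an auditor or a business stakeholder can follow.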

Hidden Pitfalls…and Pro-level Fixes

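Dummy Variables Without Proper Encoding: Feeding categories in as arbitrary integer codes imposes an ordering that does not exist and creates “ghost” influences. One-hot encode them instead, and drop one reference level per feature when fitting without regularization so the dummy columns are not perfectly collinear with the intercept.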

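Ignoring Regularization: Overfitting is a real risk with small datasets or many features. Use an L1 penalty (which can zero out weak features) or an L2 penalty (which shrinks all coefficients) to keep things honest.

Blindly Trusting the Probability Output: The model predicts likelihood, NOT certainty. The probabilities are also only as good as the fit: resampling or reweighting classes and heavy regularization can all skew them, so check calibration on held-out data. Unscaled inputs do not change the predictions of an unregularized model, but they do matter once you add a penalty, because the penalty treats every coefficient alike regardless of the feature's units.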
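To make the regularization point concrete, here is a small sketch under simplifying assumptions: one feature, no intercept, a toy dataset, and hand-written gradient descent. The function `fit_weight` and its parameters are illustrative names, not part of any library. On perfectly separable data the unpenalised weight keeps growing, while an L2 penalty holds it back.

```python
import math


def sigmoid(z: float) -> float:
    """Map log-odds to a probability between 0 and 1."""
    return 1.0 / (1.0 + math.exp(-z))


def fit_weight(xs, ys, l2_strength, learning_rate=0.5, steps=2000):
    """Fit a one-feature logistic regression (no intercept) by gradient descent.

    Minimises: mean log loss + (l2_strength / 2) * w**2.
    """
    w = 0.0
    n = len(xs)
    for _ in range(steps):
        # Gradient of the mean log loss with respect to w.
        grad = sum((sigmoid(w * x) - y) * x for x, y in zip(xs, ys)) / n
        # The L2 penalty adds l2_strength * w to the gradient.
        grad += l2_strength * w
        w -= learning_rate * grad
    return w


# Toy, perfectly separable data: negatives on the left, positives on the right.
xs = [-2.0, -1.0, 1.0, 2.0]
ys = [0, 0, 1, 1]

w_unpenalised = fit_weight(xs, ys, l2_strength=0.0)
w_penalised = fit_weight(xs, ys, l2_strength=0.1)

print(f"no penalty: w = {w_unpenalised:.2f}")
print(f"L2 penalty: w = {w_penalised:.2f}")
```

A larger weight means more extreme probabilities, so the unpenalised model ends up far more confident than four data points justify. In practice you would use a library's penalised solver rather than hand-rolled gradient descent; the sketch only shows the mechanism.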

Business-critical Tips for Maximizing ROI

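Tune the Cutoff for Loss Minimization: Don’t just use 0.5. Put a cost on false positives and false negatives, compute the confusion matrix at a range of thresholds on validation data, and set the threshold where your business saves or earns the most.

Interpret Feature Coefficients Strategically: Use odds ratios to translate technical findings into actionable management levers. Exponentiate a coefficient to get its odds ratio: a ratio of 1.5 means a one-unit increase in that feature multiplies the odds of the outcome by 1.5, holding the other features fixed.

Combine Logistic Regression with Ensemble Models: Don’t be afraid to blend it for robustness in production. Logistic regression often acts as a “sanity check” for more complex models.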
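Going back to the cutoff tip, here is a minimal sketch of cost-based threshold selection. The labels, predicted probabilities, and the 1:10 cost ratio are all invented for illustration, and `expected_cost` and `best_threshold` are hypothetical helper names. The idea is simply to try a grid of cutoffs and keep the cheapest one.

```python
def expected_cost(y_true, y_prob, threshold, cost_fp, cost_fn):
    """Total cost of the errors made when flagging everything at or above threshold."""
    cost = 0.0
    for label, prob in zip(y_true, y_prob):
        predicted = 1 if prob >= threshold else 0
        if predicted == 1 and label == 0:
            cost += cost_fp  # false positive
        elif predicted == 0 and label == 1:
            cost += cost_fn  # false negative
    return cost


def best_threshold(y_true, y_prob, cost_fp, cost_fn):
    """Try cutoffs 0.01, 0.02, ..., 0.99 and return the cheapest (lowest on ties)."""
    candidates = [i / 100 for i in range(1, 100)]
    return min(
        candidates,
        key=lambda t: expected_cost(y_true, y_prob, t, cost_fp, cost_fn),
    )


# Made-up validation data: 3 positives out of 10 (imbalanced).
y_true = [0, 0, 0, 0, 0, 0, 1, 0, 1, 1]
y_prob = [0.05, 0.10, 0.15, 0.20, 0.30, 0.35, 0.40, 0.45, 0.60, 0.80]

# Assumption: a missed positive costs ten times as much as a false alarm.
COST_FP, COST_FN = 1.0, 10.0

default_cost = expected_cost(y_true, y_prob, 0.5, COST_FP, COST_FN)
tuned = best_threshold(y_true, y_prob, COST_FP, COST_FN)
tuned_cost = expected_cost(y_true, y_prob, tuned, COST_FP, COST_FN)

print(f"cost at 0.50: {default_cost}")
print(f"cost at {tuned:.2f}: {tuned_cost}")
```

On this toy data the default cutoff misses one positive, while a lower cutoff catches it at the price of a single false alarm. On real data the threshold should be chosen on a validation set and confirmed on a separate test set, since a cutoff tuned on the same data it is evaluated on will look better than it is.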

The Ultimate Deep Dive: What Makes Logistic Regression Invaluable Today?

It’s fast. It’s transparent. It predicts probability, not just yes or no. For product leaders, regulatory teams, and innovation labs, this means:

Rapid iteration and deployment cycles.

Built-in explainability for compliance and trust.

Empowered decisions for operations, marketing, and risk teams—without needing ML PhDs.
